TimeGrad Using Diffusion Model¶

Rasul et al. (2021) proposed TimeGrad, a probabilistic forecasting model based on denoising diffusion models.

Autoregressive¶

Multivariate Forecasting Problem

Given an input sequence \(\mathbf x_{t-K: t}\), we forecast \(\mathbf x_{t+1:t+H}\).
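As a minimal sketch of this setup, the snippet below cuts a multivariate series into (history, target) pairs. The function name `make_windows` and the toy data are illustrative assumptions, not part of the original text. The history \(\mathbf x_{t-K:t}\) holds \(K+1\) steps and the target \(\mathbf x_{t+1:t+H}\) holds \(H\) steps.

```python
from typing import List, Tuple

Series = List[List[float]]  # one inner list (vector) per time step


def make_windows(series: Series, K: int, H: int) -> List[Tuple[Series, Series]]:
    """Split a series into (history, target) pairs.

    history = x_{t-K:t}   -> K + 1 consecutive steps
    target  = x_{t+1:t+H} -> the following H steps
    """
    pairs = []
    # t is the index of the last observed step in each window
    for t in range(K, len(series) - H):
        history = series[t - K : t + 1]
        target = series[t + 1 : t + H + 1]
        pairs.append((history, target))
    return pairs


# Toy bivariate series with 8 time steps (hypothetical data)
toy = [[float(i), float(10 * i)] for i in range(8)]
windows = make_windows(toy, K=2, H=3)
```

Each pair is one training or evaluation example: the model sees the history and is scored on the target.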

See this section for more Time Series Forecasting Tasks.

Notation

We use \(x^0\) to denote the actual time series. The superscript \({}^{0}\) marks the non-diffused values.

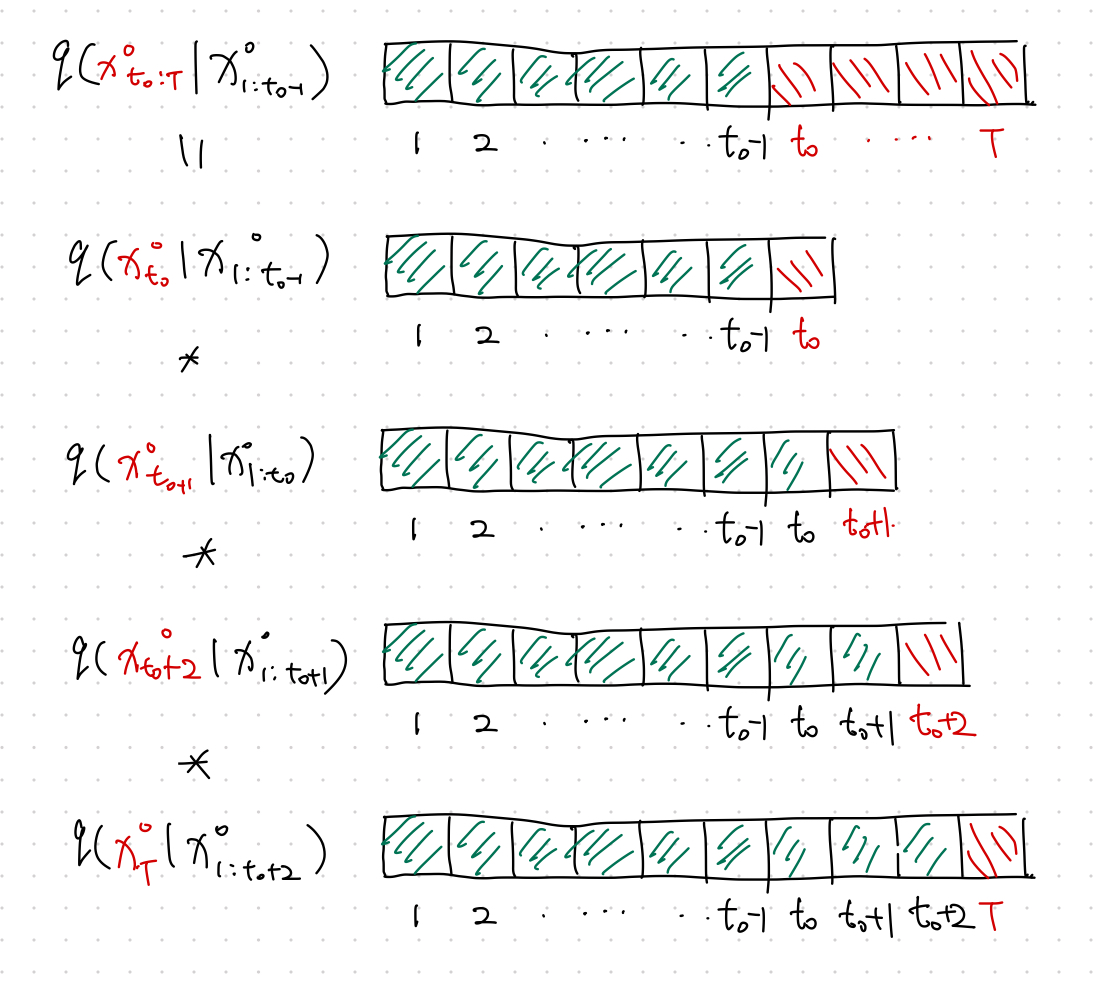

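To apply the denoising diffusion model to a multivariate forecasting problem, we define the forecasting task as the following autoregressive problem, following Rasul et al. (2021),

\[
q(\mathbf x^0_{t_0:T} \mid \mathbf x^0_{1:t_0-1}) = \prod_{t=t_0}^{T} q(\mathbf x^0_{t} \mid \mathbf x^0_{1:t-1}),
\]

where \(1:t_0-1\) indexes the input window and \(t_0:T\) the window to be forecast.

At each time step \(t\), we build a denoising diffusion model.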

Time Dynamics¶

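Note that in the denoising diffusion model, we minimize the negative log-likelihood (through its variational bound),

\[
\min_\theta \mathbb E_{q(\mathbf x^0)} \left[ -\log p_\theta(\mathbf x^0) \right].
\]

In the autoregressive setting, the above loss becomes that of the denoising model for a single time step. Explicitly,

\[
\min_\theta \mathbb E_{q(\mathbf x^0_t)} \left[ -\log p_\theta(\mathbf x^0_t \mid \mathbf x^0_{1:t-1}) \right].
\]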

Time dynamics can be captured by an RNN. To include them, we condition the denoising model on the RNN state \(\mathbf h_{t-1}\), which is built from the time series data up to the previous time step [1].
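To make the role of \(\mathbf h_{t-1}\) concrete, here is a minimal sketch of a recurrent encoder. The plain tanh (Elman-style) cell, the weight values, and the names `rnn_step` and `encode` are assumptions chosen for brevity; any recurrent architecture could play this role.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product for small dense matrices."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def rnn_step(x_t: Vector, h_prev: Vector, W: Matrix, U: Matrix, b: Vector) -> Vector:
    """Elman-style update: h_t = tanh(W x_t + U h_{t-1} + b)."""
    wx = matvec(W, x_t)
    uh = matvec(U, h_prev)
    return [math.tanh(a + c + d) for a, c, d in zip(wx, uh, b)]


def encode(history: List[Vector], W: Matrix, U: Matrix, b: Vector) -> Vector:
    """Run the cell over x_1, ..., x_{t-1} and return the final state h_{t-1}."""
    h = [0.0] * len(b)
    for x in history:
        h = rnn_step(x, h, W, U, b)
    return h


# Hypothetical weights: 2 input features, 3 hidden units
W = [[0.1, -0.2], [0.0, 0.3], [0.5, 0.1]]
U = [[0.1, 0.0, 0.0], [0.0, 0.1, 0.0], [0.0, 0.0, 0.1]]
b = [0.0, 0.0, 0.0]
h_prev = encode([[1.0, 2.0], [0.5, -1.0]], W, U, b)
```

The resulting vector `h_prev` summarizes the whole history in a fixed size, which is what the denoising model receives as its conditioning input.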

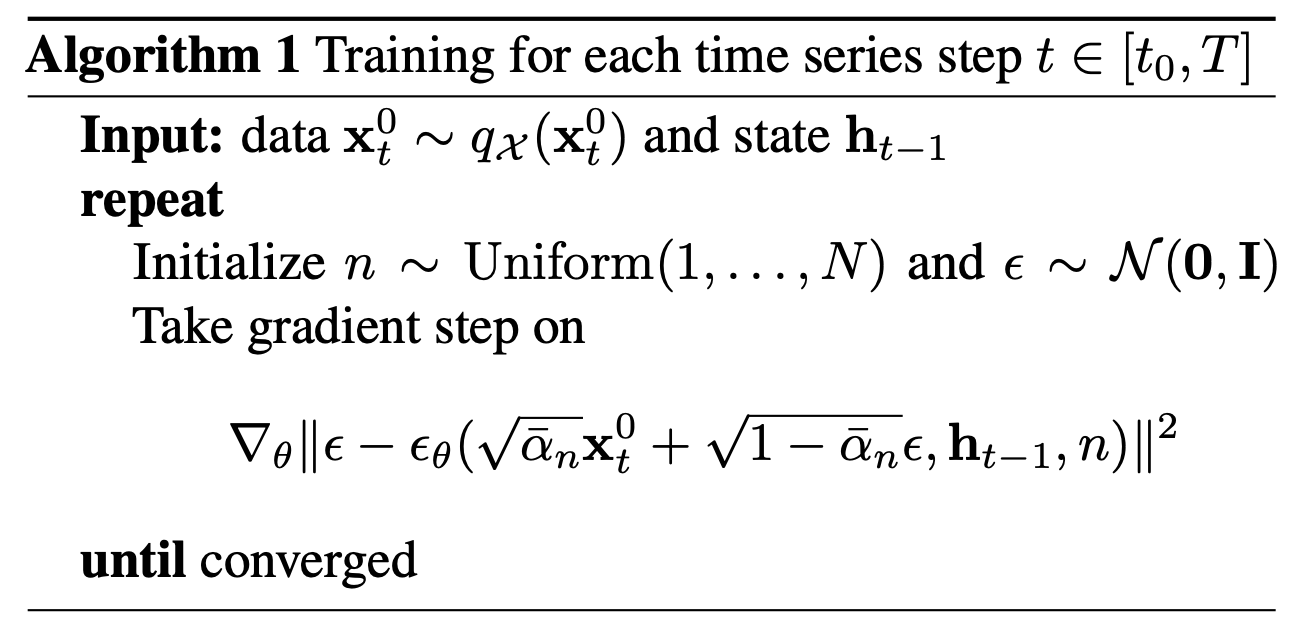

Apart from the usual time dimension \(t\), the autoregressive denoising diffusion model has another dimension to optimize: the diffusion step \(n\) for each time \(t\).

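The loss for each time step \(t\) is [1]

\[
\mathcal L_t = \mathbb E_{\mathbf x^0_t, \boldsymbol\epsilon, n} \left[ \left\lVert \boldsymbol\epsilon - \boldsymbol\epsilon_\theta \left( \sqrt{\bar\alpha_n}\, \mathbf x^0_t + \sqrt{1 - \bar\alpha_n}\, \boldsymbol\epsilon, \mathbf h_{t-1}, n \right) \right\rVert^2 \right],
\]

where \(\boldsymbol\epsilon \sim \mathcal N(\mathbf 0, \mathbf I)\) is the injected noise, \(\boldsymbol\epsilon_\theta\) is the noise-prediction network, \(\alpha_n = 1 - \beta_n\) with \(\beta_n\) the variance schedule, and \(\bar\alpha_n = \prod_{i=1}^{n} \alpha_i\).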

In other words, we only need to minimize \(\mathcal L_t\) for each time step \(t\).
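A single Monte Carlo sample of \(\mathcal L_t\) can be sketched as follows, assuming the standard noise-prediction objective of denoising diffusion models. The linear schedule values, the function names, and `zero_eps_model` (a placeholder standing in for the trained network \(\boldsymbol\epsilon_\theta\)) are assumptions for illustration only.

```python
import math
import random
from typing import Callable, List

Vector = List[float]
EpsModel = Callable[[Vector, Vector, int], Vector]


def linear_betas(N: int, beta_1: float = 1e-4, beta_N: float = 0.1) -> List[float]:
    """Linear variance schedule beta_1, ..., beta_N (an assumed choice)."""
    if N == 1:
        return [beta_1]
    return [beta_1 + (beta_N - beta_1) * i / (N - 1) for i in range(N)]


def alpha_bars(betas: List[float]) -> List[float]:
    """Cumulative products bar(alpha)_n = prod_{i<=n} (1 - beta_i)."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out


def loss_sample(x0: Vector, h_prev: Vector, n: int, abar: List[float],
                eps_model: EpsModel, rng: random.Random) -> float:
    """One sample of the squared noise-prediction error at diffusion step n (1-based)."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    a = abar[n - 1]
    # Closed-form forward diffusion: x^n = sqrt(abar_n) x^0 + sqrt(1 - abar_n) eps
    x_n = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    eps_hat = eps_model(x_n, h_prev, n)
    return sum((e - eh) ** 2 for e, eh in zip(eps, eps_hat))


def zero_eps_model(x_n: Vector, h_prev: Vector, n: int) -> Vector:
    """Placeholder for the trained network; always predicts zero noise."""
    return [0.0] * len(x_n)


rng = random.Random(0)
abar = alpha_bars(linear_betas(N=100))
value = loss_sample([0.3, -1.2], [0.0, 0.0, 0.0], n=50, abar=abar,
                    eps_model=zero_eps_model, rng=rng)
```

Note that `loss_sample` jumps straight to step `n` without simulating steps `1` to `n-1`, which is what makes random sampling over diffusion steps possible.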

Training Algorithm¶

The input data is sliced into fixed-length time series \(\mathbf x_t^0\). Since the loss \(\mathcal L_t\) can be calculated for an arbitrary diffusion step \(n\) without depending on the previous diffusion step \(n-1\), training can proceed by randomly sampling both \(\mathbf x_t^0\) and \(n\). See Rasul et al. (2021) [1].
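The random sampling described above could look like the sketch below. The function name `sample_batch` and the sizes are hypothetical; the loop body is left as a comment because the actual model and optimizer are outside the scope of this note.

```python
import random
from typing import List, Tuple


def sample_batch(num_windows: int, N: int, batch_size: int,
                 rng: random.Random) -> List[Tuple[int, int]]:
    """Draw independent (window index, diffusion step) pairs.

    The window index picks a training slice x_t^0; n is uniform on 1..N.
    No pair depends on another, so the loss terms can be evaluated in any order.
    """
    return [
        (rng.randrange(num_windows), rng.randint(1, N))
        for _ in range(batch_size)
    ]


rng = random.Random(42)
batch = sample_batch(num_windows=500, N=100, batch_size=8, rng=rng)
for window_idx, n in batch:
    # In a real training loop: look up x_t^0 and h_{t-1} for this window,
    # evaluate the loss at diffusion step n, and take a gradient step.
    pass
```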

How to Forecast¶

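After training, we run the RNN over the observed series to obtain the time dynamics encoding \(\mathbf h_T\), with which the denoising steps can be calculated using the reverse process

\[
\mathbf x^{n-1}_{T+1} = \frac{1}{\sqrt{\alpha_n}} \left( \mathbf x^{n}_{T+1} - \frac{\beta_n}{\sqrt{1 - \bar\alpha_n}}\, \boldsymbol\epsilon_\theta(\mathbf x^{n}_{T+1}, \mathbf h_T, n) \right) + \sqrt{\Sigma_\theta}\, \mathbf z,
\]

where \(\mathbf z \sim \mathcal N(\mathbf 0, \mathbf I)\) for \(n > 1\) and \(\mathbf z = \mathbf 0\) for \(n = 1\), \(\alpha_n = 1 - \beta_n\), \(\bar\alpha_n = \prod_{i=1}^{n} \alpha_i\), and \(\Sigma_\theta\) is the variance of the reverse step.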

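For example, to forecast the first step \(T+1\) with \(N\) diffusion steps, we draw \(\mathbf x^N_{T+1} \sim \mathcal N(\mathbf 0, \mathbf I)\) and apply the reverse step for \(n = N, \ldots, 1\) to reach a sample \(\mathbf x^0_{T+1}\). Feeding \(\mathbf x^0_{T+1}\) back into the RNN gives \(\mathbf h_{T+1}\), and the procedure repeats for the next time step.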
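The reverse process for one forecast step can be sketched as below. This is an illustration under stated assumptions, not the reference implementation: `zero_eps_model` is a placeholder for the trained network, and the reverse-step variance is assumed to be \(\beta_n \mathbf I\).

```python
import math
import random
from typing import Callable, List

Vector = List[float]
EpsModel = Callable[[Vector, Vector, int], Vector]


def reverse_sample(h_T: Vector, dim: int, betas: List[float],
                   eps_model: EpsModel, rng: random.Random) -> Vector:
    """Draw one sample x^0_{T+1} by running the reverse process n = N, ..., 1."""
    N = len(betas)
    # bar(alpha)_n for n = 1..N
    abar, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        abar.append(prod)

    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]  # x^N ~ N(0, I)
    for n in range(N, 0, -1):
        beta = betas[n - 1]
        alpha = 1.0 - beta
        a_bar = abar[n - 1]
        eps_hat = eps_model(x, h_T, n)
        # No noise is added on the final step (n = 1)
        z = [rng.gauss(0.0, 1.0) if n > 1 else 0.0 for _ in range(dim)]
        sigma = math.sqrt(beta)  # assumed choice: Sigma_theta = beta_n * I
        x = [
            (xi - beta / math.sqrt(1.0 - a_bar) * ei) / math.sqrt(alpha) + sigma * zi
            for xi, ei, zi in zip(x, eps_hat, z)
        ]
    return x


def zero_eps_model(x_n: Vector, h: Vector, n: int) -> Vector:
    """Placeholder for the trained network; always predicts zero noise."""
    return [0.0] * len(x_n)


betas = [1e-4 + (0.1 - 1e-4) * i / 99 for i in range(100)]  # assumed linear schedule
sample = reverse_sample([0.0, 0.0, 0.0], dim=2, betas=betas,
                        eps_model=zero_eps_model, rng=random.Random(0))
```

For a multi-step forecast, the sample would be fed back through the RNN to update the hidden state before sampling the next time step.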

It is Probabilistic¶

The quantiles are calculated by repeating the sampling many times for each forecasted time step [1].
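A minimal sketch of turning repeated samples into quantiles is shown below. The Gaussian `toy_sampler` is a stand-in assumption for one run of the reverse process on a single series and time step, and the helper names are hypothetical.

```python
import random
from typing import Callable, Dict, List


def empirical_quantile(samples: List[float], q: float) -> float:
    """Linear-interpolation quantile of a list of samples, 0 <= q <= 1."""
    ordered = sorted(samples)
    pos = q * (len(ordered) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(ordered) - 1)
    frac = pos - lo
    return ordered[lo] * (1.0 - frac) + ordered[hi] * frac


def forecast_quantiles(sampler: Callable[[], float], num_samples: int,
                       qs: List[float]) -> Dict[float, float]:
    """Call the sampler repeatedly and summarize the draws by quantiles."""
    draws = [sampler() for _ in range(num_samples)]
    return {q: empirical_quantile(draws, q) for q in qs}


rng = random.Random(0)


def toy_sampler() -> float:
    """Stand-in for one reverse-process sample of a single forecast value."""
    return rng.gauss(1.0, 0.5)


bands = forecast_quantiles(toy_sampler, num_samples=1000, qs=[0.1, 0.5, 0.9])
```

The 0.1 and 0.9 quantiles give an 80% prediction interval around the median forecast; more samples give smoother estimates at a higher sampling cost.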

Code¶

An implementation of the model can be found in the package pytorch-ts [2].

1. Rasul K, Seward C, Schuster I, Vollgraf R. Autoregressive Denoising Diffusion Models for Multivariate Probabilistic Time Series Forecasting. arXiv [cs.LG]. 2021. Available: http://arxiv.org/abs/2101.12072

2. Rasul K. PyTorchTS. Available: https://github.com/zalandoresearch/pytorch-ts